Watch: Considerations for Online Growth Strategy

Whether you’ve already launched your online program portfolio or are in the growth or planning stages, it's never too late to understand and evaluate the institutional and cultural qualifications required for long-term growth.

The Power of Student Testimonials

Student testimonials add SEO value to your websites and authenticity to your advertising. Get started creating student profiles today with these tips.

Enrollment Strategies and the Evolving Expectations of Students in 2024

Explore how universities are adapting to the evolving expectations of students in 2024 by using innovative enrollment strategies.

Community College Enrollment: Reducing Inefficiencies in Onboarding

Interested in how your community college enrollment team can streamline its processes and reach more students? Explore strategies for better onboarding.

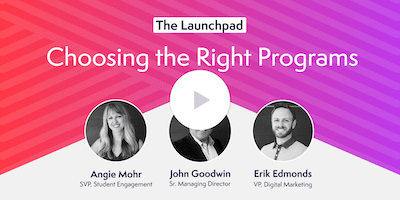

Watch: Choosing the Right Programs

Choosing the right program portfolio is a critical decision. Understand the research process: assessing market demand, competition and saturation, and the cost of marketing a program.

The Art of Persuasion and Personas in Enrollment Marketing

Discover how to harness persuasion and personas in your enrollment marketing efforts. Learn practical strategies to connect with and convert prospective students.

15 Must-Attend In-Person and Virtual Higher Education Marketing Conferences for 2024 and 2025

Explore this year’s higher education marketing conferences for a chance to network and learn how to move your marketing and enrollment strategies forward.

Tulane University and Archer Partner to Transform Online Student Experience & Operations

Archer Education is deepening our commitment to an alternative partnership approach: Online Growth Enablement. Archer helps institutions build and manage all or part of their online operations in-house, reducing or completely eliminating dependency on third-party providers or long-term contracts with Online Program Management (OPM) providers.

Change Readiness Assessment: Staying Strategic Through Constant Change

Explore the crucial role of a change readiness assessment in strategic enrollment planning for higher ed. Discover Archer’s Readiness Assessment and leadership insights.

Watch: A Good, Better, Best Approach to Online Program Growth

Whether you have an existing online program portfolio or you're just getting started in online education, this workshop is for you.

Better Conversations and Faster Decisions: Understanding Buyer Psychology

Demystify buyer psychology in higher education and discover modern strategies to enhance engagement and help prospective students make faster decisions.

Archer Education Announces Executive Promotions

Archer recently announced the promotion of three key team members who support the company’s Growth Enablement approach.